Colab

Non-large models

We provide a Colab notebook for you to try Whisper models with sherpa-onnx step by step.

Large models

For large Whisper models, please see the following Colab notebook. It walks you step by step through trying the exported large-v3 ONNX model with sherpa-onnx on CPU as well as on GPU.

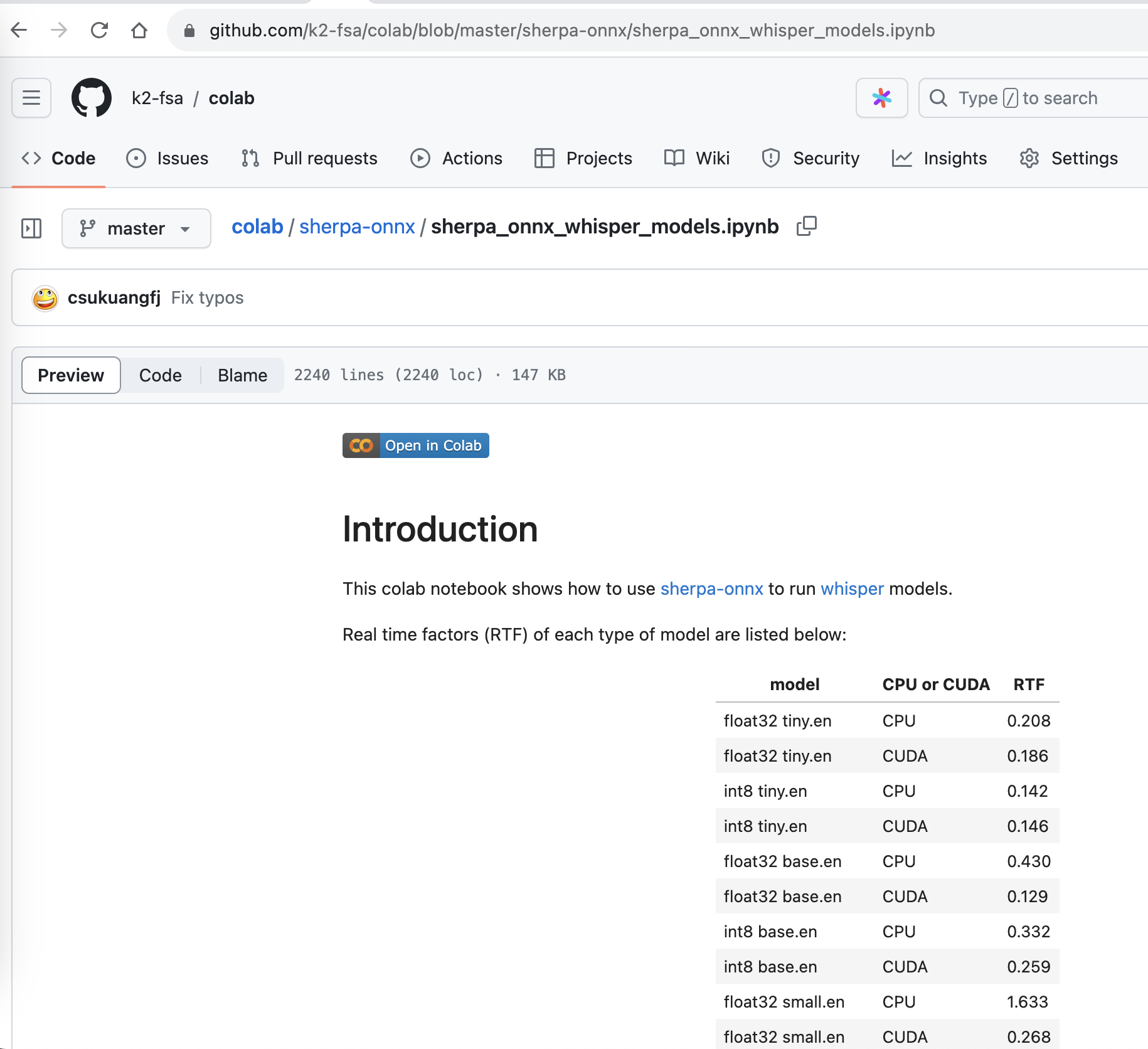

You will find that the real-time factor (RTF) on GPU (Tesla T4) is less than 1, i.e., decoding runs faster than real time.
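RTF is the time spent decoding divided by the duration of the audio that was decoded, so a value below 1 means the model finishes before the audio would have finished playing. The sketch below shows that arithmetic and one way to time a single decode call. It is not sherpa-onnx's own RTF reporting code: `decode` is a hypothetical placeholder for whatever call performs recognition in your setup, and the helper names are illustrative only.

```python
import time
from typing import Callable, Tuple


def real_time_factor(elapsed_seconds: float, audio_seconds: float) -> float:
    """Return RTF = processing time / audio duration (RTF < 1 is faster than real time)."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return elapsed_seconds / audio_seconds


def timed_decode(
    decode: Callable[[], str], num_samples: int, sample_rate: int
) -> Tuple[str, float]:
    """Time a decode call and return (text, RTF).

    `decode` is a hypothetical stand-in for the actual recognizer call;
    `num_samples` / `sample_rate` gives the audio duration in seconds.
    """
    audio_seconds = num_samples / sample_rate
    start = time.perf_counter()
    text = decode()
    elapsed = time.perf_counter() - start
    return text, real_time_factor(elapsed, audio_seconds)


if __name__ == "__main__":
    # Worked example: 2.5 s of compute for 10 s of audio gives RTF 0.25.
    print(real_time_factor(2.5, 10.0))
```

Timing with `time.perf_counter()` around only the decode step keeps model loading out of the measurement, which is usually what an RTF figure is meant to reflect.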